Google has officially announced a groundbreaking leap in the field of robotics and artificial intelligence with the debut of Gemini Robotics AI. Branded under the company’s renowned Gemini AI initiative, this new system is designed to bring powerful, multimodal AI capabilities directly onto robotic hardware — eliminating the need for cloud-based processing.

With this launch, Google aims to address the increasing demand for smarter, more responsive robots that can function independently in real-time environments.

Gemini Robotics AI is an advanced vision-language-action (VLA) model built to run entirely on-device. Unlike traditional AI models that require constant internet connectivity and cloud computation, this system allows robots to perceive, reason, and act all within their own hardware.

By integrating Google’s most sophisticated AI models directly into the robot’s core, the solution ensures faster response times, greater reliability, and enhanced privacy.
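The perceive, reason, and act cycle described above can be pictured as a single in-process loop. The following is only a minimal sketch: `Observation`, `Action`, `run_control_loop`, and the toy `fake_sense` and `fake_policy` functions are hypothetical names invented for illustration, not part of any Google API, and the "policy" here is a trivial keyword match rather than a real model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    """Hypothetical container: one camera frame plus the user's instruction."""
    image: List[List[int]]  # stand-in for pixel data
    instruction: str


@dataclass
class Action:
    """Hypothetical low-level command, e.g. a named motion primitive."""
    name: str


def run_control_loop(
    sense: Callable[[], Observation],
    policy: Callable[[Observation], Action],
    actuate: Callable[[Action], None],
    steps: int,
) -> List[Action]:
    """Run perceive -> reason -> act for a fixed number of steps, all in-process."""
    history: List[Action] = []
    for _ in range(steps):
        obs = sense()         # perceive: read local sensors
        action = policy(obs)  # reason: local inference, no network call
        actuate(action)       # act: hand the command to the motor layer
        history.append(action)
    return history


# Toy stand-ins so the sketch runs without a robot or a model.
def fake_sense() -> Observation:
    return Observation(image=[[0]], instruction="pick up the red apple")


def fake_policy(obs: Observation) -> Action:
    return Action("grasp" if "pick up" in obs.instruction else "idle")


executed: List[str] = []
history = run_control_loop(
    fake_sense, fake_policy, lambda a: executed.append(a.name), steps=3
)
print(executed)
```

The point of the structure is that every stage is a local function call, so nothing in the loop waits on a remote server.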

The timing of the announcement is critical, as the robotics industry increasingly moves toward edge computing. With sectors like healthcare, manufacturing, logistics, and consumer robotics demanding more autonomy and fewer latency issues, on-device AI is becoming essential.

According to a report by Allied Market Research, the global AI in robotics market is expected to reach over $64 billion by 2030, growing at a CAGR of 25.3%. This trend underscores the strategic importance of Google’s move.
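For readers who want to see what a 25.3% compound annual growth rate implies, the arithmetic can be sketched as below. The report's base year is not stated in this article, so `ASSUMED_YEARS` is a purely hypothetical placeholder; change it and the implied starting value changes with it.

```python
def compound_growth(start_value: float, annual_rate: float, years: int) -> float:
    """Value after compounding `annual_rate` once per year for `years` years."""
    return start_value * (1.0 + annual_rate) ** years


def implied_start_value(end_value: float, annual_rate: float, years: int) -> float:
    """Invert the compounding formula to recover the starting value."""
    return end_value / (1.0 + annual_rate) ** years


CAGR = 0.253          # 25.3%, as quoted in the article
END_VALUE_BN = 64.0   # "over $64 billion", as quoted in the article
ASSUMED_YEARS = 8     # hypothetical horizon; the article does not give the base year

start = implied_start_value(END_VALUE_BN, CAGR, ASSUMED_YEARS)
print(f"Implied starting market size: ${start:.1f}B (under the assumed horizon)")
```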

By launching Gemini Robotics AI, Google positions itself at the forefront of this next-generation robotics wave, signaling a future where machines learn, adapt, and assist — all without relying on an internet connection.

This innovation doesn’t just mark a technical achievement; it sets a new benchmark for what intelligent, self-reliant robots can become in the real world.

Gemini Robotics AI – Key Innovations

In a bold move toward AI independence, Google has launched Gemini Robotics AI as a fully on-device solution, marking a significant departure from the cloud-dependent models that have long defined the robotics industry.

This next-gen platform brings Google’s Gemini 2.0 model architecture, the foundation of the Gemini Robotics family, into robotic systems, enabling them to see, understand, and act in real time without relying on internet connectivity.

One of the standout innovations of Gemini Robotics AI is its compact and highly efficient design. Unlike previous systems that relied heavily on external servers to process visual inputs and generate responses, Gemini Robotics AI is optimized to run directly on a robot’s onboard hardware.

Whether it’s a robotic arm like ALOHA or a humanoid machine such as Apollo, this AI can interpret complex language instructions, process vision inputs, and plan physical movements—all offline.

Google has achieved this by training Gemini Robotics AI on a vast dataset of robot demonstrations, paired with natural language and video inputs. This results in a Vision-Language-Action (VLA) model that’s capable of adapting to a variety of tasks and environments.

For example, Gemini AI demonstrated the ability to understand commands like “stack the blocks by color” or “pick up the red apple on the left” with minimal training, showcasing both accuracy and versatility.

The ability to run locally brings multiple advantages. It reduces latency, improves security and privacy, and eliminates dependency on network conditions—all of which are critical for applications in sensitive sectors such as healthcare, defense, and autonomous logistics.

Moreover, running on-device lowers operational costs by reducing bandwidth and server usage, which is particularly beneficial for large-scale robotic deployments.
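The latency argument can be made concrete with a simple budget check. This is a rough sketch with made-up numbers: the function names and every millisecond figure below are illustrative assumptions, not measurements of Gemini Robotics AI or of any real network. Note that on-device inference is not automatically faster than server-side inference; the saving comes from removing the network round trip and its variability.

```python
def loop_latency_ms(inference_ms: float, network_round_trip_ms: float = 0.0) -> float:
    """Total time for one control step: model inference plus any network hop."""
    return inference_ms + network_round_trip_ms


def meets_deadline(latency_ms: float, control_period_ms: float) -> bool:
    """A step is usable only if it finishes within the control period."""
    return latency_ms <= control_period_ms


# Illustrative numbers only (assumptions, not benchmarks).
CONTROL_PERIOD_MS = 50.0   # e.g. a 20 Hz control loop
ON_DEVICE_INFERENCE_MS = 40.0
CLOUD_INFERENCE_MS = 15.0  # a bigger server may infer faster...
CLOUD_ROUND_TRIP_MS = 80.0  # ...but the network hop dominates

on_device = loop_latency_ms(ON_DEVICE_INFERENCE_MS)
cloud = loop_latency_ms(CLOUD_INFERENCE_MS, CLOUD_ROUND_TRIP_MS)

print("on-device meets deadline:", meets_deadline(on_device, CONTROL_PERIOD_MS))
print("cloud meets deadline:", meets_deadline(cloud, CONTROL_PERIOD_MS))
```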

With this innovation, Google is presenting Gemini Robotics AI not just as a model but as a new standard for robotics architecture. The ability to operate fully offline allows robots to function in environments where connectivity is limited or unavailable, such as rural warehouses, disaster zones, or even outer space.

As this technology rolls out to partners and developers, it promises to reshape how AI is integrated into physical machines, leading the charge into the future of autonomous robotics.

Demonstrations of Gemini Robotics AI

To showcase its real-world capabilities, Google presented Gemini Robotics AI through a series of demonstrations that highlight its intelligence, adaptability, and precision. These tests were not just simulations: they were live-action tasks performed by different robots using Gemini AI running entirely on-device, without any cloud assistance.

One of the most talked-about demos featured a robotic system shooting a basketball into a hoop. This seemingly playful task required a remarkable degree of coordination between vision, decision-making, and motor control.

With Gemini Robotics AI, the robot was able to analyze the basket’s position, calculate the trajectory, and execute a successful shot, all using its local processing power. The entire sequence illustrated the system’s advanced understanding of space, object dynamics, and intent-based actions.

Another standout example was the ALOHA robotic arm, a research platform used to test fine-motor tasks. Equipped with Gemini Robotics AI, ALOHA successfully performed tasks such as folding a T-shirt, pouring liquids, stacking colored blocks, and even setting a dining table.

These aren’t just pre-programmed routines — they required real-time decision-making, flexible adaptation, and multi-step planning, all handled by the AI autonomously.

In addition, Franka FR3, a collaborative robot arm widely used in research labs, demonstrated Gemini AI’s capabilities in industrial-style precision work. The FR3 executed delicate object manipulation tasks, like picking up unfamiliar items and placing them accurately based on voice commands — again, all performed offline using the embedded Gemini model.

Perhaps the most visually striking demo came from Apollo, a humanoid robot. When powered by Gemini Robotics AI, Apollo was able to walk, identify objects, and follow complex verbal instructions like “walk to the table, pick up the water bottle, and place it on the shelf.”

This level of embodied reasoning, powered by AI, brings us one step closer to practical household and service robots.

By running these demos on three distinct robot platforms (the ALOHA arm, the Franka FR3, and the Apollo humanoid), Google presents Gemini Robotics AI not only as a theoretical advancement but as a proven, versatile solution ready for multi-domain use.

These hands-on demonstrations provide tangible evidence of how this AI model can revolutionize the way robots interact with the physical world, from home assistance to manufacturing and beyond.

Gemini Robotics-ER: Enhancing Embodied Reasoning

As part of its expanding robotics capabilities, Google has launched Gemini Robotics AI alongside a powerful new variant: Gemini Robotics-ER (Embodied Reasoning). This advanced model is specifically engineered to deepen a robot’s ability to understand its physical environment and interact with objects in a more human-like, intelligent way.

While traditional AI models struggle with the nuance of real-world physics and multi-step manipulation, Gemini-ER brings a new level of spatial understanding and motor planning to robotic systems.

At its core, Gemini Robotics-ER builds on the foundational architecture of Gemini but focuses on enhancing spatial reasoning, cause-effect understanding, and real-time adaptation.

This makes it exceptionally effective in tasks that require more than just object recognition — such as folding, stacking, sorting, and manipulating items the robot has never encountered before.

In one demonstration, a robot powered by Gemini-ER was able to fold a T-shirt neatly and place it into a storage box — a task that involves understanding fabric deformation, hand positioning, and step-by-step logic. In another task, the robot accurately stacked irregular-shaped blocks by analyzing their angles, weights, and colors, showcasing its advanced 3D modeling and prediction abilities.

Grasping unfamiliar objects is another critical area where Gemini Robotics-ER shines. Robots traditionally rely on predefined parameters for object handling, but the ER variant allows robots to visually examine a new object and determine how to pick it up safely and effectively.

This includes adapting to slippery, soft, or fragile items without prior training data — an ability once thought to be exclusive to human dexterity.

Gemini-ER’s strength lies in its real-world learning efficiency. Unlike earlier models that needed thousands of training examples, Gemini-ER performs these complex actions using fewer than 100 demonstrations. This low-data requirement is a game changer, enabling rapid deployment and adaptability across industries.

By integrating Gemini Robotics-ER, Google positions Gemini Robotics AI not just as an intelligent assistant but as a physical problem solver, capable of functioning in warehouses, homes, healthcare facilities, and even disaster recovery zones where quick and safe object manipulation is essential.

Performance and Adaptability of Gemini Robotics AI

A major reason for the buzz around Gemini Robotics AI is its exceptional performance and adaptability, achieved with minimal training data. In rigorous real-world tests, the Gemini Robotics AI model has demonstrated a task success rate exceeding 90% using fewer than 100 human-annotated demonstrations.

This is a remarkable improvement over previous AI systems that required thousands of examples to reach similar reliability.

This efficiency is a direct result of Google’s integration of multimodal learning — combining visual perception, natural language processing, and motion planning in a single, compact on-device model.

Instead of relying on massive cloud datasets and high-latency feedback loops, Gemini Robotics AI can observe, learn, and apply knowledge almost instantly, allowing robots to adapt to new tasks and environments quickly.

One of the most compelling aspects is that Gemini Robotics AI delivers accuracy comparable to, or in some cases better than, traditional cloud-based models, but without the need for constant internet access.

In trials involving robotic arms performing kitchen tasks, Gemini Robotics AI achieved near-human efficiency in stacking, pouring, and cleaning—all with zero cloud calls.

Further evaluations have shown that the AI model can generalize to new instructions, such as “clean the cluttered table” or “group the toys by color,” even when phrased differently than how it was originally trained.

This demonstrates not just high performance, but true language and task adaptability, an essential quality for robots expected to operate in unstructured, human-centered environments.

Another strength lies in its hardware flexibility. Whether running on a lightweight robotic arm like ALOHA or a full humanoid robot like Apollo, Gemini adapts seamlessly to various mechanical platforms and sensor arrays.

This portability ensures that the same intelligence core can be used across industries — from warehouse automation to eldercare — with minimal system adjustments.

With this adaptable, high-performing model, Google is offering Gemini Robotics AI as more than a lab prototype: it’s a deployable AI solution poised to accelerate real-world robotics innovation across the globe.

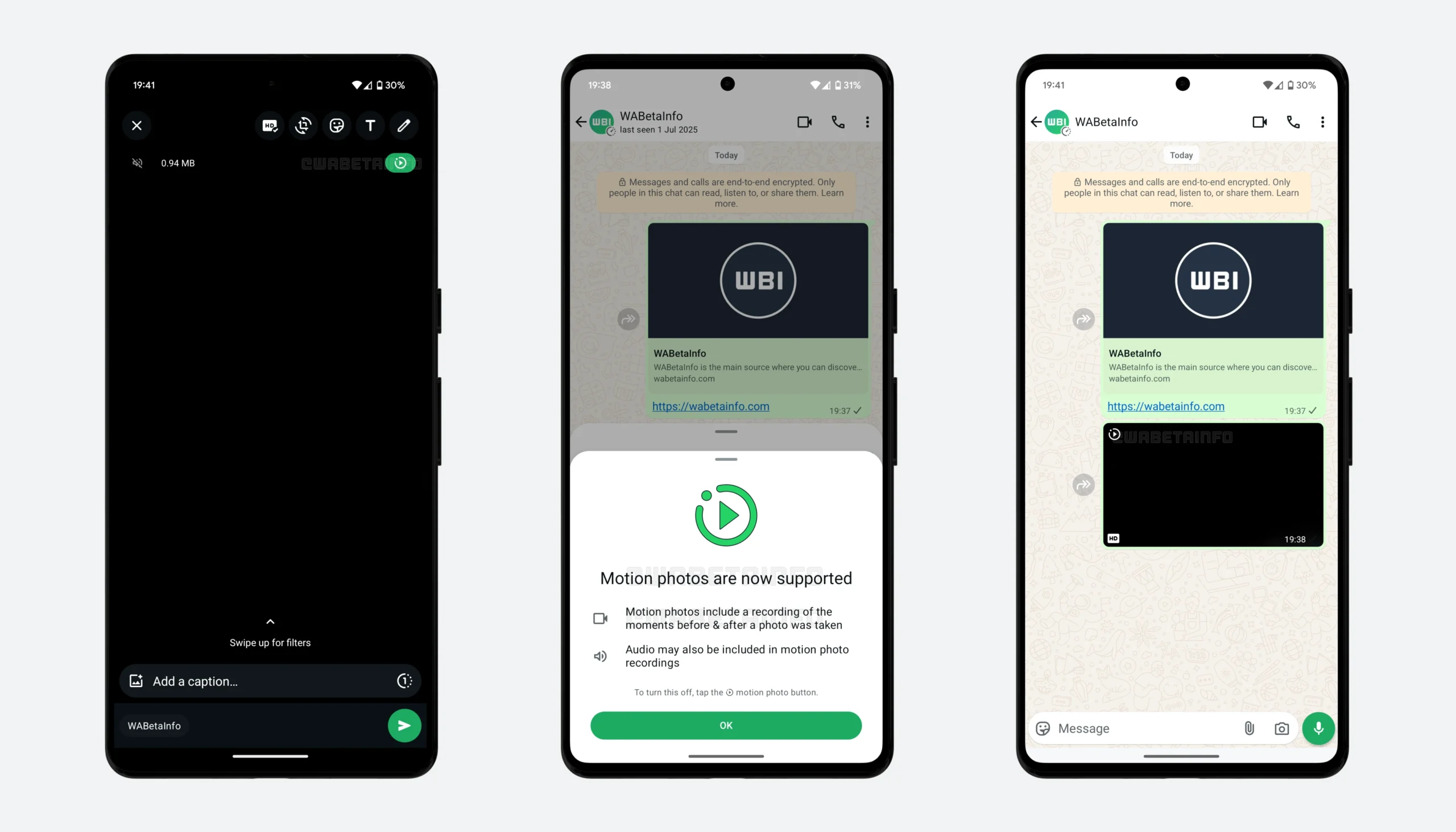

SDK & Developer Access for Gemini Robotics AI

With the unveiling of its on-device AI model, Google is offering Gemini Robotics AI not only as a research breakthrough but as a practical tool for the developer community.

To support real-world adoption, Google has initiated a limited rollout of the Gemini Robotics SDK to select partners, robotics companies, and academic research labs around the world.

This Software Development Kit (SDK) includes tools, libraries, and APIs that enable developers to integrate Gemini AI capabilities into their own robotic systems. While still in early access, the SDK supports a wide range of platforms — from robotic arms and mobile robots to humanoid and industrial machines.

The goal is to encourage experimentation and refine the model through feedback and real-world testing.

By making the SDK available, Google brings Gemini Robotics AI into an ecosystem that fosters collaboration and innovation. Early adopters can test the AI in dynamic settings like smart warehouses, manufacturing lines, eldercare facilities, and autonomous delivery fleets, all of which demand both reliable performance and real-time decision-making.

Importantly, the Gemini SDK is designed for cross-platform integration, supporting popular robotics frameworks such as ROS (Robot Operating System), as well as custom firmware used in proprietary machines. This allows developers to work within familiar environments while taking full advantage of Gemini’s powerful vision-language-action capabilities.

One of the most exciting possibilities is that with SDK access, developers can build new applications for robotic automation, such as voice-controlled home assistants, autonomous shop floor operators, and even robotic companions with advanced understanding of their physical surroundings.

Although currently limited in availability, Google has hinted that broader access may follow as testing scales and partner feedback matures. This measured rollout ensures performance stability, security, and optimization before full public release.

By opening its toolkit to the robotics community, Google is releasing Gemini Robotics AI not just as a finished product but as a platform that will evolve, fueled by developers who will stretch its limits, refine its use cases, and build the next generation of smart robotics.

Safety and Ethical Considerations

A strong framework for safety and ethics is one of the most critical elements of Gemini Robotics AI’s design. With robotics moving closer to everyday human interaction, whether at home, in healthcare, or on the factory floor, ensuring machines act responsibly and predictably is paramount.

Google has addressed this through a blend of built-in safety filters, ethical protocols, and intelligent decision checks.

At the core of the safety system lies a set of “robot constitution protocols” — a layered set of rules and constraints that govern what the AI can and cannot do. These protocols are modeled to reflect real-world ethical priorities such as preventing harm, ensuring user consent, and respecting privacy.

This means that if a robot encounters an instruction that could be dangerous or invasive (like handling sharp objects near a human), Gemini AI is programmed to either pause for clarification or refuse the task outright.
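One way to picture a layered rule set that can allow a task, pause for clarification, or refuse outright is the sketch below. It is a toy illustration under stated assumptions: `Verdict`, `Request`, the two example rules, and the "most restrictive verdict wins" policy are all hypothetical, and real safety layers would reason over far richer context than keyword checks.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional


class Verdict(Enum):
    ALLOW = "allow"
    CLARIFY = "clarify"  # pause and ask the user
    REFUSE = "refuse"    # decline the task outright


@dataclass(frozen=True)
class Request:
    """Hypothetical view of an instruction plus minimal scene context."""
    instruction: str
    human_nearby: bool


# A rule returns a verdict when it has an opinion, or None to abstain.
Rule = Callable[[Request], Optional[Verdict]]


def sharp_object_rule(req: Request) -> Optional[Verdict]:
    """Refuse to handle a knife while a person is close by."""
    if "knife" in req.instruction.lower() and req.human_nearby:
        return Verdict.REFUSE
    return None


def vague_instruction_rule(req: Request) -> Optional[Verdict]:
    """Ask for clarification when the instruction is too short to act on."""
    if len(req.instruction.split()) < 3:
        return Verdict.CLARIFY
    return None


_SEVERITY = {Verdict.ALLOW: 0, Verdict.CLARIFY: 1, Verdict.REFUSE: 2}


def evaluate(req: Request, rules: List[Rule]) -> Verdict:
    """Apply every rule and keep the most restrictive verdict."""
    verdict = Verdict.ALLOW
    for rule in rules:
        result = rule(req)
        if result is not None and _SEVERITY[result] > _SEVERITY[verdict]:
            verdict = result
    return verdict


RULES: List[Rule] = [sharp_object_rule, vague_instruction_rule]

print(evaluate(Request("hand me the knife", human_nearby=True), RULES))
print(evaluate(Request("grab it", human_nearby=False), RULES))
print(evaluate(Request("pick up the red apple", human_nearby=False), RULES))
```

Letting the strictest verdict win means adding a new rule can only make the robot more cautious, never less.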

Complementing this are multilayered decision-check mechanisms. Before executing any physical action — such as moving toward a person, lifting an object, or using a tool — Gemini Robotics AI runs a rapid internal validation process.

This includes environmental scans, context verification, and outcome prediction to ensure the action aligns with safety standards. These checks are executed in milliseconds, thanks to the model’s on-device architecture.
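The three checks named above (environmental scan, context verification, outcome prediction) can be sketched as a short validation pipeline that stops at the first failure. Everything here is an assumption made for illustration: the `PlannedAction` fields, the 0.5 m clearance and 0.8 success thresholds, and the function names are hypothetical and do not describe Google's actual implementation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class PlannedAction:
    """Hypothetical summary of an action the robot is about to execute."""
    name: str
    target: str                # object the action refers to; empty if unresolved
    min_clearance_m: float     # closest distance to a person along the path
    predicted_success: float   # model's own estimate, from 0.0 to 1.0


@dataclass(frozen=True)
class CheckResult:
    passed: bool
    reason: str


Check = Callable[[PlannedAction], CheckResult]


def environment_scan(action: PlannedAction) -> CheckResult:
    ok = action.min_clearance_m >= 0.5  # illustrative threshold
    return CheckResult(ok, "path clear" if ok else "person too close")


def context_verification(action: PlannedAction) -> CheckResult:
    ok = action.target != ""
    return CheckResult(ok, "target resolved" if ok else "target not identified")


def outcome_prediction(action: PlannedAction) -> CheckResult:
    ok = action.predicted_success >= 0.8  # illustrative threshold
    return CheckResult(ok, "likely to succeed" if ok else "predicted outcome too risky")


def validate(action: PlannedAction, checks: List[Check]) -> CheckResult:
    """Run checks in order and return the first failure, if any."""
    for check in checks:
        result = check(action)
        if not result.passed:
            return result
    return CheckResult(True, "all checks passed")


CHECKS: List[Check] = [environment_scan, context_verification, outcome_prediction]

safe = PlannedAction("lift", "water bottle", min_clearance_m=1.2, predicted_success=0.95)
risky = PlannedAction("lift", "water bottle", min_clearance_m=0.2, predicted_success=0.95)

print(validate(safe, CHECKS))
print(validate(risky, CHECKS))
```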

Google has also embedded bias mitigation and ethical reasoning filters within the language and perception models. These prevent the AI from misinterpreting instructions or drawing on harmful stereotypes when processing visual or verbal input — a known challenge in large language models and computer vision systems.

Additionally, Gemini Robotics AI supports third-party oversight. Developers using the SDK can integrate custom ethical constraints specific to their environments, such as compliance with medical, manufacturing, or educational standards.

Importantly, these safeguards are not just theoretical. In testing environments, Gemini-powered robots underwent thousands of simulated and real-world trials where they had to choose safer or more efficient alternatives to ambiguous commands — such as using a cloth instead of a knife to open a package or waiting for a user to move before proceeding with a task.

As robotics becomes more integrated into human spaces, these robust ethical frameworks are not optional; they are essential. By embedding them directly into Gemini AI, Google presents Gemini Robotics AI as not only smart and capable but fundamentally responsible and trustworthy.

Industry Impact & Future Outlook

The launch of Gemini Robotics AI sends ripples across the entire robotics and artificial intelligence industry. By successfully combining real-time on-device intelligence with high adaptability and ethical reasoning, Google has not only set a new technical benchmark but also reshaped the direction for future robotic systems.

One of the biggest impacts is on AI robotics standards. Traditionally, robotic systems have relied on cloud infrastructure, introducing latency, privacy concerns, and scalability issues.

Gemini’s model proves that advanced reasoning, multimodal perception, and natural language understanding can all operate offline — changing how developers, manufacturers, and research institutions approach robot design and deployment.

We may soon see industry standards shift toward more autonomous, private, and responsive on-device AI solutions.

This move also influences educational and industrial robotics platforms. With Gemini Robotics AI in the spotlight, academic institutions are likely to revisit their curricula and research focus to align with on-device AI.

Meanwhile, startups and large enterprises may feel encouraged (or pressured) to abandon traditional cloud-first models and pursue compact, real-time AI agents that are safer, faster, and more cost-efficient.

In terms of competitive positioning, Google now stands toe-to-toe with major players like Tesla (Optimus robot), NVIDIA (Isaac Sim & robotics stack), and OpenAI-backed efforts in embodied intelligence. Gemini Robotics AI’s unique blend of vision, language, reasoning, and motion planning all within a lightweight footprint gives Google an edge — especially in environments where privacy, bandwidth, or latency are critical constraints.

Furthermore, by releasing an SDK, even in a limited capacity, Google is positioning Gemini Robotics AI not as a closed product but as a scalable ecosystem, potentially the “Android of Robotics”: a foundational platform that others build on. This could accelerate partnerships with robotics manufacturers, healthcare providers, logistics companies, and even space agencies.

Looking ahead, the future of Gemini AI could involve integration with Google’s broader ecosystem — from Android and Wear OS to Google Home and Pixel devices — enabling a seamless ambient computing experience where AI-powered robots work intuitively within everyday life.

In short, Gemini Robotics AI is not just a technical marvel but a strategic leap that may redefine how society interacts with robots, making those interactions faster, safer, and smarter than ever before.

Conclusion – Why Gemini Robotics AI Matters

With Gemini Robotics AI, the tech giant has taken a pivotal step toward redefining what is possible in human-robot interaction. From its on-device architecture to its ability to perform real-world tasks like stacking, folding, and even playing basketball, Gemini AI represents a leap forward not just in technology, but in practicality and accessibility.

The integration of advanced spatial reasoning through Gemini-ER, real-time decision checks, compact processing, and ethical safeguards shows how serious Google is about building robots that are not only capable but trustworthy and context-aware. The performance benchmarks are equally impressive: achieving high success rates with fewer than 100 demonstrations, and competing with — or even outperforming — traditional cloud-based systems in many scenarios.

Perhaps even more transformative is the release of a limited-access SDK, which allows developers and researchers to explore the full potential of this system. Whether it’s for assistive care, smart manufacturing, home automation, or educational tools, the applications of Gemini Robotics AI are vast — and just beginning to unfold.

In a world increasingly shaped by automation and intelligent systems, Google presents Gemini Robotics AI as a responsible, scalable, and forward-looking solution. It lays the foundation for a future where robots are not only more helpful but also more human-aligned: responding to our voices, understanding our environments, and adapting to our needs in real time.

As we move toward that future, one thing is clear: Gemini Robotics AI is not just another innovation — it’s a catalyst for the next era of robotics, where artificial intelligence finally meets the practical world with precision, purpose, and empathy.